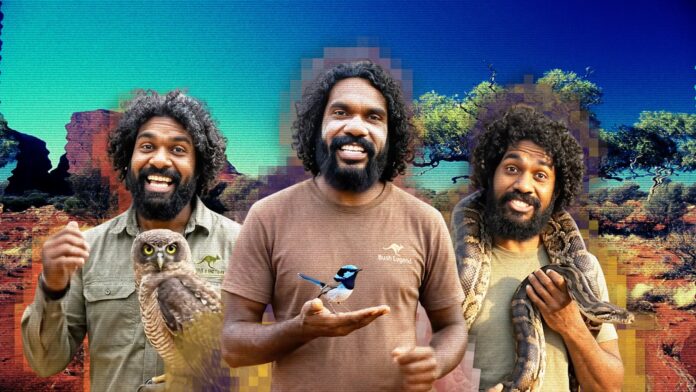

A viral TikTok personality known as “Bush Legend” – presenting as an Aboriginal man sharing native animal facts – is entirely AI-generated, sparking debate over “digital blackface” and the unchecked use of artificial intelligence to represent Indigenous cultures. The account, active across TikTok, Facebook, and Instagram, uses AI-created visuals of a man in traditional attire alongside didgeridoo-inspired music, misleading many viewers into believing they are engaging with authentic Indigenous content.

The Illusion of Authenticity

The account’s creator explicitly states in the account description that the content is AI-generated, but this detail is easily overlooked by casual scrollers. Many videos feature AI watermarks or captions acknowledging their synthetic nature, yet a significant portion of the audience remains unaware. The fabricated persona has garnered positive reactions, with some comparing the AI’s “energy” to that of Steve Irwin. However, this enthusiasm is directed at a completely artificial construct.

Lack of Accountability and Cultural Respect

This phenomenon highlights a growing trend: AI being used to simulate Indigenous peoples, knowledges, and cultures without any community accountability. While the account itself may not cause direct harm, it contributes to an environment where racist commentary thrives, with some users praising the AI persona while simultaneously denigrating real Indigenous individuals. The creator, based in New Zealand, has no apparent connection to the Aboriginal or Torres Strait Islander communities whose likeness is being exploited.

The creator’s dismissive response – “If this isn’t your thing, mate, just scroll and move on” – fails to address the underlying ethical concerns. The insistence on using an Aboriginal-coded likeness for generic animal stories raises questions about intent and reinforces the problem of extractive appropriation.

Indigenous Cultural and Intellectual Property at Risk

Generative AI presents a new threat to Indigenous Cultural and Intellectual Property (ICIP) rights, expanding beyond education and governance into unregulated digital spaces. The lack of Indigenous involvement in AI creation and the environmental cost of AI infrastructure further exacerbate these issues. The national AI plan offers little in the way of meaningful regulation, leaving Indigenous self-determination vulnerable.

The Rise of AI “Blakface”

The ease with which AI can now fabricate Indigenous personas facilitates a new form of “AI Blakface” – shallow, stereotypical representations lacking cultural depth. These AI-generated figures often wear cultural jewelry or simulated ochre paint, appropriating sacred practices without understanding or respect. This algorithmic settler colonialism perpetuates digital violence against Indigenous peoples, allowing non-Indigenous entities to profit financially from stolen knowledge.

What Can Be Done?

Increasing AI and media literacy is essential for discerning real from fake online. Supporting authentic Indigenous content creators – such as @Indigigrow, @littleredwrites, or @meissa – is crucial. Before engaging with online content, ask yourself: Is this AI-generated? Is this where my support should go?

The unchecked use of AI to simulate Indigenous cultures represents a dangerous escalation of digital appropriation, diminishing self-determination and reinforcing harmful stereotypes. Critical engagement and support for real Indigenous voices are vital to counter this trend.